Lovegrove Mathematicals

"Dedicated to making Likelinesses the entity of prime interest"

Lovegrove Mathematicals

"Dedicated to making Likelinesses the entity of prime interest"

This part of the site is about the algebraic properties of entities we shall call likelinesses. These can be thought of as best-estimates of probabilities, but we shall be defining them in the abstract without using the concepts of probability or best-estimates. In fact, having defined "likeliness", we shall then use it to define "probability" (or, at least, our concept of probability -a replacement for the frequentist probability), rather than the other way round.

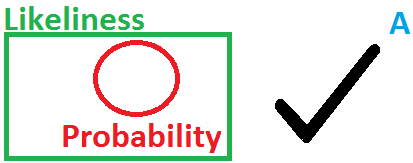

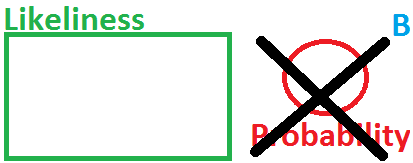

So, probabilities will be a special case of likelinesses or -to put it the other way round- likelinesses are generalisation of probabilities. The relationship between the two is as in Fig A, not Fig B.

Taking this approach means that likelinesses become a fundamental abstract entity in their own right, rather than being simply an approximation to something else.

The main reason I am interested in this is to eliminate the confusion which often exists between probabilities and likelinesses. For example, the Law of Succession gives the likeliness, not the probability. Other examples are:-